Artificial intelligence unpredictably impacts radiologist performance

AI improves performance for some radiologists, but not others, raising questions about a one-size-fits-all approach to integrating these tools in healthcare.

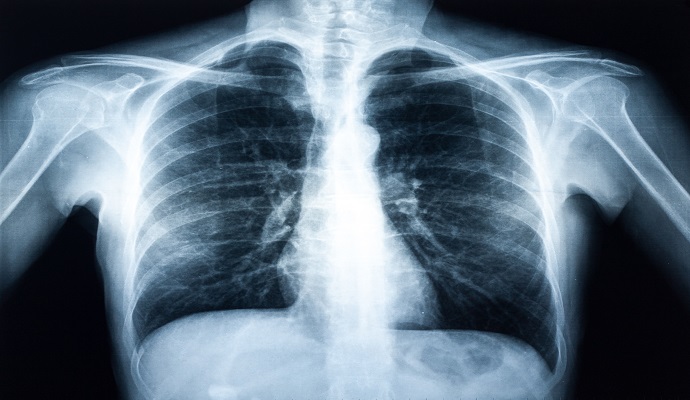

Source: Getty Images

Researchers from Harvard Medical School (HMS), the Massachusetts Institute of Technology (MIT) and Stanford University have demonstrated that the use of artificial intelligence (AI)-based assistive tools improves performance for some radiologists, but worsens it for others, according to a study published this week in Nature Medicine.

Proponents of AI use in healthcare emphasize the technology’s potential to augment clinicians’ performance and decision-making. The research team noted that while some studies suggest these tools can boost radiologists’ performance as a group, few have examined the impact of AI on individual performance.

To investigate the effects of AI assistance, the researchers evaluated the performance of 140 radiologists across 15 chest X-ray diagnostic tasks on a set of 324 patient cases with 15 pathologies. Each radiologist was assessed in terms of their ability to correctly identify clinically relevant abnormalities both with and without the use of AI.

The results showed that the impact of AI was inconsistent, varying significantly from one radiologist to another. Performance worsened for some but improved for others.

The research team hypothesized that individual factors – such as area of specialty, years of practice and prior use of AI tools – could predict how an AI tool would affect a clinician’s performance. However, these factors failed to reliably predict the impact of AI.

The study findings also challenged other assumptions often made in discussions around healthcare AI.

Contrary to what the research team expected, radiologists who exhibited lower performance at baseline did not necessarily improve when given AI assistance: some achieved higher performance, but many worsened and others experienced no change.

Overall, clinicians who performed worse at baseline continued to perform worse whether or not they used AI, while their higher-performing counterparts performed consistently well regardless of AI assistance.

The research further revealed that poorly performing AI tools negatively impacted radiologists’ diagnostic accuracy, while more accurate models boosted clinician performance.

The findings underscore the importance of testing and validating AI tools prior to deployment, the researchers noted. But they indicated that the results do not explain why and how AI tools impact clinician performance, necessitating further study.

“Our research reveals the nuanced and complex nature of machine-human interaction,” said study co-senior author Nikhil Agarwal, PhD, professor of economics at MIT, in a press release. “It highlights the need to understand the multitude of factors involved in this interplay and how they influence the ultimate diagnosis and care of patients.”

The research team stated that to ensure AI tools improve clinician performance, rather than harm it, developers and clinicians should work together to understand the factors that influence the human-AI interaction.

“[Researchers] should not look at radiologists as a uniform population and consider just the ‘average’ effect of AI on their performance,” stated co-senior author Pranav Rajpurkar, PhD, assistant professor of biomedical informatics in the Blavatnik Institute at HMS. “To maximize benefits and minimize harm, we need to personalize assistive AI systems.”

“Clinicians have different levels of expertise, experience, and decision-making styles, so ensuring that AI reflects this diversity is critical for targeted implementation,” said Feiyang “Kathy” Yu, a research associate at the Rajpurkar lab. “Individual factors and variation would be key in ensuring that AI advances rather than interferes with performance and, ultimately, with diagnosis.”

These questions about the impact of AI on clinician performance are part of a broader conversation around the role that these tools will play in patient care.

In November, leaders from Sentara Healthcare and UC San Diego Health discussed whether clinicians will become dependent on AI as the technology advances, as well as how health systems can address concerns about clinician over-reliance.